cocotb is a test framework that lets users test their Verilog or VHDL designs with Python-based testbenches. But at the same time, cocotb is also a piece of software that needs testing! As of today, this testing has gotten even better: we are now running all tests against the exact release binaries we’re uploading to PyPI, including our tests against proprietary simulators such as Riviera-PRO, Questa, or Xcelium. At the same time, cocotb developers can be more productive, as the latency for pull request checks has dropped from over an hour to around 15 minutes.

This extended testing is exciting news for our cocotb users, who can enjoy even more rock-solid cocotb releases. And it’s exciting news for the free and open source silicon community as a whole: cocotb has worked hard to earn a place in the hearts of thousands of verification engineers by being reliable and yet fun to work with. With our new CI system, we continue to push the boundaries of what’s possible in open source EDA and to address both the technical and the non-technical issues along the way.

So what did we do, and why did we do it? Read on to learn more.

cocotb comes with an extensive test suite. The tests ensure that we don’t accidentally break existing functionality when we add new features. But arguably even more important is our test suite for compatibility testing with the various simulators cocotb supports (12 and counting!).

Testing against open source simulators is relatively easy: just download and install the simulator, and run the test suite. This approach works well for manual testing, and equally well in continuous integration (aka CI, or automated testing).

With proprietary simulators, things get a little trickier: we have to somehow obtain the software and associated licenses, install them in a secure location (to meet the licensing agreements), and then run the tests there.

Over the years, the cocotb project (through the FOSSi Foundation) has been partnering with Aldec, Cadence, and Siemens to get access to Riviera-PRO, Xcelium, and Questa. We put those tools to use in our “Private CI” setup, a single machine which used Azure Pipelines to execute tests when a new pull request was opened.

This setup worked well over the years, but didn’t scale: all tests were serialized, and the more tests we added, or the more demand there was for testing, the longer the end-to-end test latencies got. With each test suite run against a single simulator taking between 10 and 15 minutes, latencies quickly added up to multiple hours. Or in other words: developers had to wait multiple hours before they could see the results of the test runs, and merge their changes. Furthermore, all tests ran on the same machine, which could lead to test artifacts from the previous run influencing the next run.
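To put rough numbers on this, here is a small Python sketch comparing serialized and parallel test latency. The workload (ten runs of 12 minutes each) is a made-up illustration, not a measurement from our CI, and the function names are ours; the scheduling is a simple greedy estimate.

```python
import heapq


def serialized_latency(job_minutes):
    """All jobs run one after another on a single machine."""
    return sum(job_minutes)


def parallel_latency(job_minutes, runners):
    """Greedy estimate: each job goes to the runner that frees up first."""
    if runners < 1:
        raise ValueError("need at least one runner")
    # A list of equal values already satisfies the heap property.
    finish_times = [0] * runners
    # Schedule the longest jobs first to keep the runners balanced.
    for minutes in sorted(job_minutes, reverse=True):
        earliest = heapq.heappop(finish_times)
        heapq.heappush(finish_times, earliest + minutes)
    return max(finish_times)


# Hypothetical workload: 10 test suite runs of 12 minutes each.
jobs = [12] * 10
print("serialized:", serialized_latency(jobs), "minutes")
print("10 runners:", parallel_latency(jobs, runners=10), "minutes")
```

With these assumed numbers, the serialized setup needs 120 minutes end to end, while ten parallel runners finish in 12 minutes.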

For a long time, we learned to live with those limitations. But as we kept adding more and more tests and wanted to run those against more and more simulators, the setup reached its breaking point. And that’s where the journey started to where we are today!

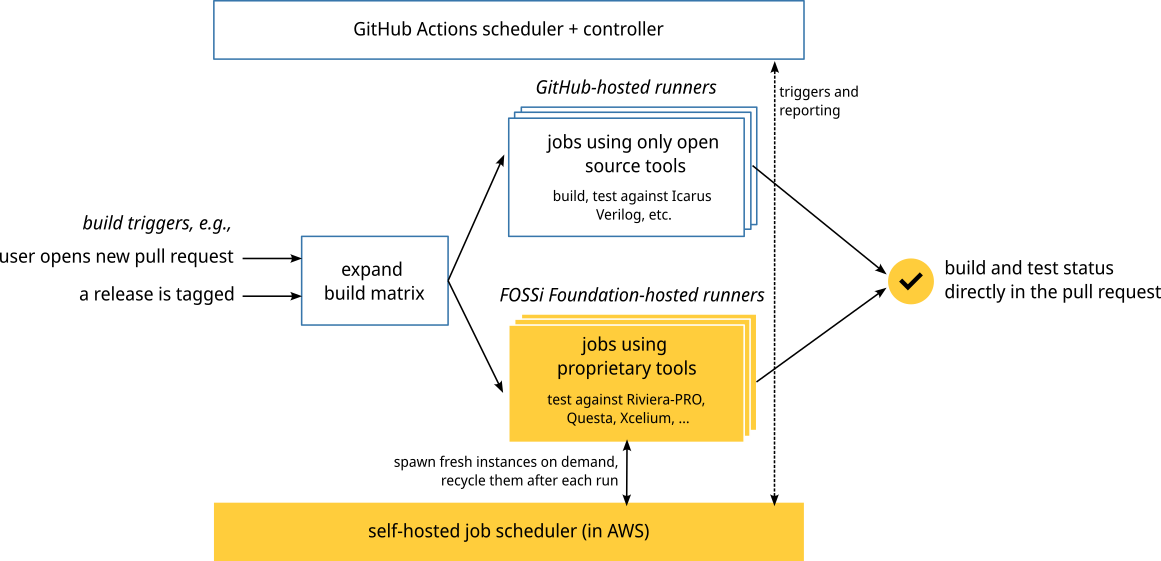

Without further ado, this picture shows how the setup looks today:

In our new setup, we’re only using GitHub Actions to manage CI jobs. We started with a (reasonably standard) setup, which makes use of free GitHub Actions-provided runners (i.e., machines created on demand to execute tests). That’s how we continue to run build and test jobs which only rely on open source tools.

To also run tests against proprietary tools, we extended this setup into our own infrastructure. Using the excellent Terraform module for scalable self hosted GitHub action runners, we were able to create a setup in Amazon Web Services (AWS) that hosts all proprietary tools in a secure way and makes them available to our test jobs.

Whenever a new pull request is opened, the GitHub Actions scheduler will let our infrastructure know, which then spawns a fresh AWS EC2 instance with access to all necessary tools. After configuring itself, the instance registers with GitHub Actions and is assigned one of the test jobs. After the job completes, the test result is reported to GitHub Actions, and the instance is terminated.

With this setup, we’re now able to scale our compute nodes up to the maximum number of licenses we have available. And if there are no tests to be executed, we scale down to zero, i.e., the whole infrastructure is only paid for if there is actual demand for it.
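The scaling rule described here boils down to a one-liner. The following Python sketch only illustrates the idea; it is not the actual logic of the Terraform module, and `desired_runners` and its parameters are hypothetical names.

```python
def desired_runners(queued_jobs, available_licenses):
    """Number of runner instances to keep alive (illustrative only).

    Scale up with demand, but never beyond the number of simulator
    licenses, and drop to zero when no jobs are waiting.
    """
    if queued_jobs < 0 or available_licenses < 0:
        raise ValueError("counts must not be negative")
    return min(queued_jobs, available_licenses)


# Hypothetical numbers: 8 licenses, varying demand.
for queued in (0, 3, 20):
    print(queued, "queued ->", desired_runners(queued, 8), "runners")
```

No queued jobs means no instances (and no AWS bill), while a burst of jobs is capped by the license count rather than by a fixed number of machines.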

But there’s more: Now that all testing is happening in GitHub Actions, we are able to test the release binaries right after they are built, but before they are uploaded to PyPI. This ensures that we actually test the exact same binaries that we release later on, and prevents releases that don’t meet this quality bar, all without human intervention.
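Conceptually, this is a build, test, upload gate. Here is a minimal Python sketch of that idea; in our setup the gating is done by GitHub Actions jobs rather than by code like this, and `gated_release`, `checks`, and `upload` are hypothetical names used for illustration only.

```python
def gated_release(artifact, checks, upload):
    """Upload the artifact only if every check passes on that same artifact.

    artifact: the built release binary (here simply bytes)
    checks:   callables taking the artifact and returning True on success
    upload:   callable that publishes the artifact
    """
    for check in checks:
        if not check(artifact):
            return False  # a failing check blocks the release
    # The object that was tested is the object that gets uploaded.
    upload(artifact)
    return True


# Hypothetical usage with stand-in checks and a list acting as the package index.
published = []
wheel = b"pretend this is a wheel"
gated_release(wheel, [lambda a: len(a) > 0], published.append)
gated_release(wheel, [lambda a: False], published.append)
print(len(published), "artifact(s) published")
```

The important property is that nothing is rebuilt between testing and publishing: the tested artifact and the released artifact are one and the same.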

We as the cocotb project are thankful to the FOSSi Foundation and their sponsors for their financial support (after all, we have to pay AWS!). We would also like to thank our EDA tool partners, Aldec, Cadence, and Siemens, for their ongoing support, which made testing cocotb with proprietary simulators possible in the first place!

cocotb is a volunteer-driven open source project under the umbrella of the FOSSi Foundation. Please reach out to Philipp at philipp@fossi-foundation.org if you or your company want to support cocotb, either financially to help pay for costs such as running our continuous integration setup, or with in-kind donations, such as simulator licenses.

If you’d like to learn more about cocotb, have a look at www.cocotb.org for ways to get started.

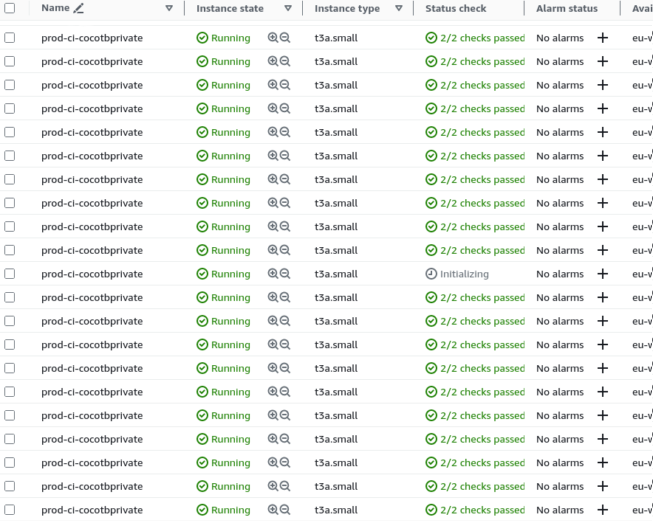

To close with a picture, here’s the AWS EC2 console showing many parallel tests being run on individual test runners: